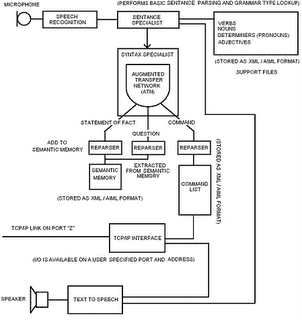

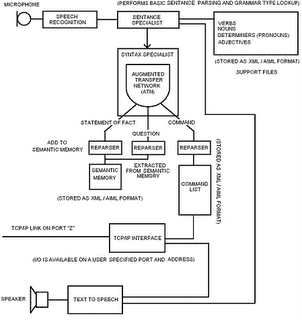

I recently had a look at natural language processing and speech recognition. It makes for an interesting interface between people and machines. I had already experimented with voice control of robots, but that was before I was able to program the Microsoft Speech SDK properly.

After doing some research I have come up with a possible template for a speech processing NLP module for conversing with and controlling a robot. I'm hoping the system will be capable of the following:

- Distinguishing between conversation types: Question, Statement and Command

- Acquiring semantic knowledge by parsing Statements

- Retrieving facts from the semantic knowledge base to answer simple Questions

- Performing Commands by comparing what was said against a list of acceptable commands

- Asking a user for the definition of a word that does not appear in the lexicon and updating it
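As a rough illustration of the first and fourth points, here is a minimal Python sketch of how an utterance might be sorted into one of the three conversation types. The word lists and the `classify` function are assumptions made up for this example, not part of the Microsoft Speech SDK or of the actual module, and a real system would need a much richer test than looking at the first word.

```python
# Hypothetical word lists; a real system would draw these from its lexicon
# and from the robot's actual command set.
QUESTION_WORDS = {"what", "where", "who", "when", "why", "how",
                  "is", "are", "does", "do", "can"}
COMMANDS = {"move forward", "turn left", "turn right", "stop"}


def classify(utterance: str) -> str:
    """Return 'Command', 'Question' or 'Statement' for a recognised utterance."""
    # Normalise case and drop any trailing punctuation from the recogniser.
    text = utterance.lower().strip().rstrip("?.!")
    words = text.split()
    if not words:
        return "Statement"
    # Commands are matched against the list of acceptable commands.
    if text in COMMANDS:
        return "Command"
    # Anything that opens with a question word is treated as a Question.
    if words[0] in QUESTION_WORDS:
        return "Question"
    # Everything else is assumed to be a Statement to learn from.
    return "Statement"
```

With these lists, `classify("Turn left")` falls through to the command check, while `classify("The ball is red")` ends up as a Statement because it matches neither test.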

To do this I'm planning to save semantic memory in the form of human-readable files which can be parsed for content. Effectively, you can tell the robot whatever you like and ask it a question about what you said later.
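One possible shape for such a file, sketched in Python, is a plain text file with one fact per line stored as a tab-separated triple. The triple layout and the `remember` and `recall` names are assumptions for the sake of the example; the actual file format has not been decided.

```python
from pathlib import Path
from typing import List


def remember(path: Path, subject: str, relation: str, obj: str) -> None:
    """Append one fact as a tab-separated line, e.g. 'ball<TAB>is<TAB>red'."""
    with path.open("a", encoding="utf-8") as f:
        f.write(f"{subject}\t{relation}\t{obj}\n")


def recall(path: Path, subject: str, relation: str) -> List[str]:
    """Return every stored object that matches the given subject and relation."""
    if not path.exists():
        return []
    answers = []
    with path.open(encoding="utf-8") as f:
        for line in f:
            parts = line.rstrip("\n").split("\t")
            # Skip malformed lines so a hand-edited file cannot crash the lookup.
            if len(parts) == 3 and parts[0] == subject and parts[1] == relation:
                answers.append(parts[2])
    return answers
```

A Statement such as "the ball is red" would become `remember(path, "ball", "is", "red")`, and the Question "what is the ball?" would become `recall(path, "ball", "is")`. Because the file is plain text, it can also be read or corrected by hand.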

For understanding the general gist of the conversation I am using an Augmented Transition Network (ATN). This contains a finite state machine whose state transitions are triggered by the grammatical category of the word it is parsing. This method is quite fiddly and takes a lot of fine tuning, but you can usually make sense of the sentences spoken.
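To give a feel for the idea, here is a heavily simplified, ATN-style sketch in Python. It is a single flat state machine whose arcs are labelled with parts of speech and which fills named registers as it goes. A full ATN also allows recursive sub-networks and arbitrary tests on each arc, which this leaves out. The lexicon, state names and register names are all invented for the example.

```python
# Hypothetical lexicon mapping each known word to its part of speech.
LEXICON = {
    "the": "DET", "a": "DET",
    "ball": "NOUN", "robot": "NOUN", "box": "NOUN",
    "is": "VERB", "sees": "VERB",
    "red": "ADJ", "big": "ADJ",
}

# Arcs: (current state, part of speech) -> (next state, register to fill or None).
ARCS = {
    ("START", "DET"): ("SUBJ_NP", None),
    ("START", "NOUN"): ("SUBJ_DONE", "subject"),
    ("SUBJ_NP", "ADJ"): ("SUBJ_NP", None),
    ("SUBJ_NP", "NOUN"): ("SUBJ_DONE", "subject"),
    ("SUBJ_DONE", "VERB"): ("VERB_DONE", "verb"),
    ("VERB_DONE", "ADJ"): ("END", "complement"),
    ("VERB_DONE", "NOUN"): ("END", "complement"),
    ("VERB_DONE", "DET"): ("OBJ_NP", None),
    ("OBJ_NP", "ADJ"): ("OBJ_NP", None),
    ("OBJ_NP", "NOUN"): ("END", "complement"),
}


class UnknownWordError(Exception):
    """Raised for a word missing from the lexicon, so the user can be asked to define it."""


def parse(sentence):
    """Walk the network word by word; return the filled registers, or None if parsing fails."""
    state, registers = "START", {}
    for word in sentence.lower().split():
        pos = LEXICON.get(word)
        if pos is None:
            raise UnknownWordError(word)
        arc = ARCS.get((state, pos))
        if arc is None:
            return None  # No arc for this part of speech from the current state.
        state, register = arc
        if register:
            registers[register] = word
    # Only accept sentences that finish in the END state.
    return registers if state == "END" else None
```

Parsing "the ball is red" walks START, SUBJ_NP, SUBJ_DONE, VERB_DONE, END and leaves `subject`, `verb` and `complement` filled in. Most of the fine tuning goes into the arc table: each new sentence pattern needs its own arcs, and it is easy to accept nonsense by accident.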

At this time I've gotten as far as the ATN section. I'll get around to the rest of it when I get some time.
